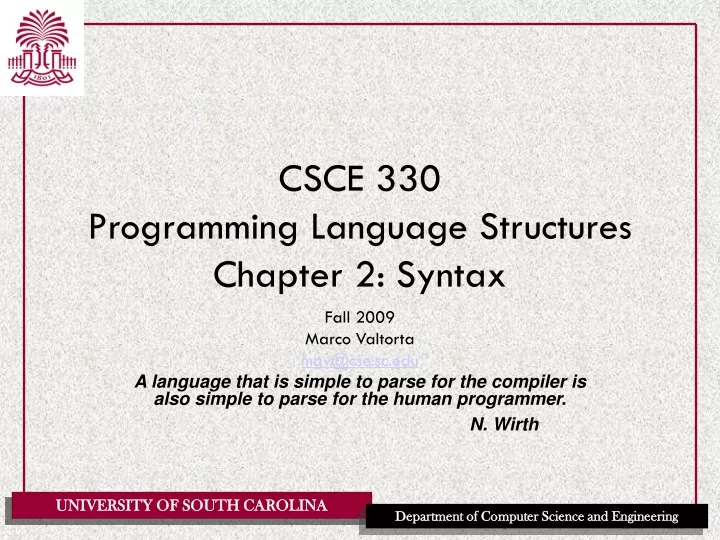

Chapter 2 :: Programming Language Syntax - ppt download

A regular expression is one of the following: Regular Expressions A regular expression is one of the following: A character The empty string, denoted by Two regular expressions concatenated Two regular expressions separated by | (i.e., or) A regular expression followed by the Kleene star (concatenation of zero or more strings) Copyright © 2005 Elsevier

Chapter 2 :: Programming Language Syntax

Programming Language Pragmatics. Michael L. Scott. Copyright © 2005 Elsevier.

Regular Expressions. A regular expression is one of the following: A character. The empty string, denoted by Two regular expressions concatenated. Two regular expressions separated by | (i.e., or) A regular expression followed by the Kleene star (concatenation of zero or more strings) Copyright © 2005 Elsevier.

Regular Expressions. Numerical literals in Pascal may be generated by the following: Copyright © 2005 Elsevier.

The notation for context-free grammars (CFG) is sometimes called Backus-Naur Form (BNF) A CFG consists of. A set of terminals T. A set of non-terminals N. A start symbol S (a non-terminal) A set of productions. Copyright © 2005 Elsevier.

Expression grammar with precedence and associativity. Copyright © 2005 Elsevier.

Parse tree for expression grammar (with precedence) for * 5. Copyright © 2005 Elsevier.

Parse tree for expression grammar (with left associativity) for Copyright © 2005 Elsevier.

Scanning. Recall scanner is responsible for. tokenizing source. removing comments. (often) dealing with pragmas (i.e., significant comments) saving text of identifiers, numbers, strings. saving source locations (file, line, column) for error messages. Copyright © 2005 Elsevier.

Scanning. Suppose we are building an ad-hoc (hand-written) scanner for Pascal: We read the characters one at a time with look-ahead. If it is one of the one-character tokens { ( ) [ ] < > , ; = + - etc } we announce that token. If it is a ., we look at the next character. If that is a dot, we announce . Otherwise, we announce . and reuse the look-ahead. Copyright © 2005 Elsevier.

Scanning. If it is a <, we look at the next character. if that is a = we announce <= otherwise, we announce < and reuse the look-ahead, etc. If it is a letter, we keep reading letters and digits and maybe underscores until we can t anymore. then we check to see if it is a reserve word. Copyright © 2005 Elsevier.

Scanning. If it is a digit, we keep reading until we find a non-digit. if that is not a . we announce an integer. otherwise, we keep looking for a real number. if the character after the . is not a digit we announce an integer and reuse the . and the look-ahead. Copyright © 2005 Elsevier.

Scanning. Pictorial representation of a Pascal scanner as a finite automaton. Copyright © 2005 Elsevier.

Scanning. This is a deterministic finite automaton (DFA) Lex, scangen, etc. build these things automatically from a set of regular expressions. Specifically, they construct a machine that accepts the language identifier | int const | real const | comment | symbol | Copyright © 2005 Elsevier.

Scanning. We run the machine over and over to get one token after another. Nearly universal rule: always take the longest possible token from the input thus foobar is foobar and never f or foo or foob. more to the point, is a real const and never 3, ., and Regular expressions generate a regular language; DFAs recognize it. Copyright © 2005 Elsevier.

Scanning. Scanners tend to be built three ways. ad-hoc. semi-mechanical pure DFA (usually realized as nested case statements) table-driven DFA. Ad-hoc generally yields the fastest, most compact code by doing lots of special-purpose things, though good automatically-generated scanners come very close. Copyright © 2005 Elsevier.

Scanning. Writing a pure DFA as a set of nested case statements is a surprisingly useful programming technique. though it s often easier to use perl, awk, sed. for details see Figure Table-driven DFA is what lex and scangen produce. lex (flex) in the form of C code. scangen in the form of numeric tables and a separate driver (for details see Figure 2.12) Copyright © 2005 Elsevier.

the next character will generally need to be saved for the next token. In some cases, you may need to peek at more than one character of look-ahead in order to know whether to proceed. In Pascal, for example, when you have a 3 and you a see a dot. do you proceed (in hopes of getting 3.14) or. do you stop (in fear of getting 3..5) Copyright © 2005 Elsevier.

In messier cases, you may not be able to get by with any fixed amount of look-ahead.In Fortr an, for example, we have DO 5 I = 1,25 loop DO 5 I = 1.25 assignment. Here, we need to remember we were in a potentially final state, and save enough information that we can back up to it, if we get stuck later. Copyright © 2005 Elsevier.

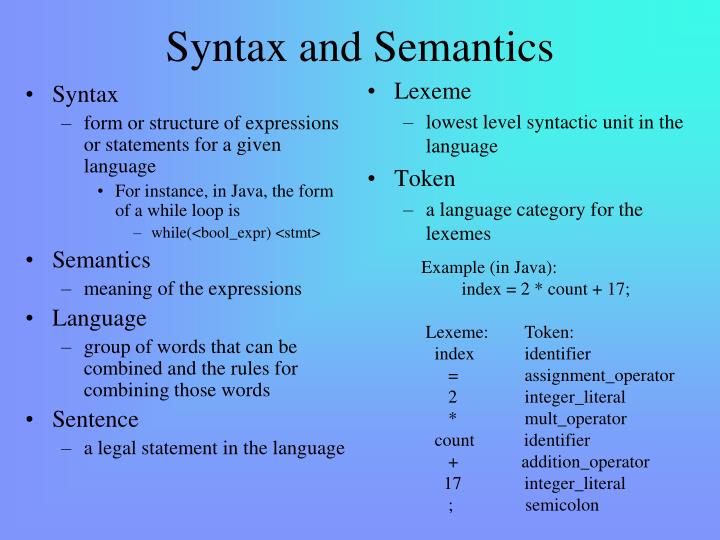

terminals (tokens) non-terminals. production. derivations (left-most and right-most - canonical) parse trees. sentential form. Copyright © 2005 Elsevier.

a parser is a language recognizer. There is an infinite number of grammars for every context-free language. not all grammars are created equal, however. Copyright © 2005 Elsevier.

It turns out that for any CFG we can create a parser that runs in O(n^3) time. There are two well-known parsing algorithms that permit this. Early s algorithm. Cooke-Younger-Kasami (CYK) algorithm. O(n^3) time is clearly unacceptable for a parser in a compiler - too slow. Copyright © 2005 Elsevier.

Parsing. Fortunately, there are large classes of grammars for which we can build parsers that run in linear time. The two most important classes are called LL and LR. LL stands for Left-to-right, Leftmost derivation . LR stands for Left-to-right, Rightmost derivation’ Copyright © 2005 Elsevier.

Parsing. LL parsers are also called top-down , or predictive parsers & LR parsers are also called bottom-up , or shift-reduce parsers. There are several important sub-classes of LR parsers. SLR. LALR. We won t be going into detail on the differences between them. Copyright © 2005 Elsevier.

Every CFL that can be parsed deterministically has an SLR(1) grammar (which is LR(1)) Every deterministic CFL with the prefix property (no valid string is a prefix of another valid string) has an LR(0) grammar. Copyright © 2005 Elsevier.

This number indicates how many tokens of look-ahead are required in order to parse. Almost all real compilers use one token of look-ahead. The expression grammar (with precedence and associativity) you saw before is LR(1), but not LL(1) Copyright © 2005 Elsevier.

LL Parsing. Here is an LL(1) grammar (Fig 2.15): program stmt list $$$ stmt_list stmt stmt_list. | stmt id := expr. | read id. | write expr. expr term term_tail. term_tail add op term term_tail. Copyright © 2005 Elsevier.

LL Parsing. LL(1) grammar (continued) 10. term factor fact_tailt. 11. fact_tail mult_op fact fact_tail. | factor ( expr ) | id. | number. add_op + | - mult_op * | / Copyright © 2005 Elsevier.

LL Parsing. Like the bottom-up grammar, this one captures associativity and precedence, but most people don t find it as pretty. for one thing, the operands of a given operator aren t in a RHS together! however, the simplicity of the parsing algorithm makes up for this weakness. How do we parse a string with this grammar by building the parse tree incrementally. Copyright © 2005 Elsevier.

LL Parsing. Example (average program) read A. read B. sum := A + B. write sum. write sum / 2. We start at the top and predict needed productions on the basis of the current left-most non-terminal in the tree and the current input token. Copyright © 2005 Elsevier.

LL Parsing. Parse tree for the average program (Figure 2.17) Copyright © 2005 Elsevier.

Table-driven LL parsing: you have a big loop in which you repeatedly look up an action in a two-dimensional table based on current leftmost non-terminal and current input token. The actions are. (1) match a terminal. (2) predict a production. (3) announce a syntax error. Copyright © 2005 Elsevier.

LL Parsing. LL(1) parse table for parsing for calculator language. Copyright © 2005 Elsevier.

for details see Figure The key thing to keep in mind is that the stack contains all the stuff you expect to see between now and the end of the program. what you predict you will see. Copyright © 2005 Elsevier.

LL Parsing. Problems trying to make a grammar LL(1) left recursion. example: id_list id | id_list , id. equivalently. id_list id id_list_tail. id_list_tail , id id_list_tail. | epsilon. we can get rid of all left recursion mechanically in any grammar. Copyright © 2005 Elsevier.

LL Parsing. Problems trying to make a grammar LL(1) common prefixes: another thing that LL parsers can t handle. solved by left-factoring example: stmt id := expr | id ( arg_list ) equivalently. stmt id id_stmt_tail. id_stmt_tail := expr. | ( arg_list) we can eliminate left-factor mechanically. Copyright © 2005 Elsevier.

there are infinitely many non-LL LANGUAGES, and the mechanical transformations work on them just fine. the few that arise in practice, however, can generally be handled with kludges. Copyright © 2005 Elsevier.

LL Parsing. Problems trying to make a grammar LL(1) the dangling else problem prevents grammars from being LL(1) (or in fact LL(k) for any k) the following natural grammar fragment is ambiguous (Pascal) stmt if cond then_clause else_clause | other_stuff. then_clause then stmt. else_clause else stmt. | epsilon. Copyright © 2005 Elsevier.

balanced_stmt if cond then balanced_stmt. else balanced_stmt | other_stuff. unbalanced_stmt if cond then stmt | if cond then balanced_stmt. else unbalanced_stmt. Copyright © 2005 Elsevier.

else goes with the closest then or. more generally, the first of two possible productions is the one to predict (or reduce) Copyright © 2005 Elsevier.

LL Parsing. Better yet, languages (since Pascal) generally employ explicit end-markers, which eliminate this problem. In Modula-2, for example, one says: if A = B then. if C = D then E := F end. else. G := H. end. Ada says end if ; other languages say fi Copyright © 2005 Elsevier.

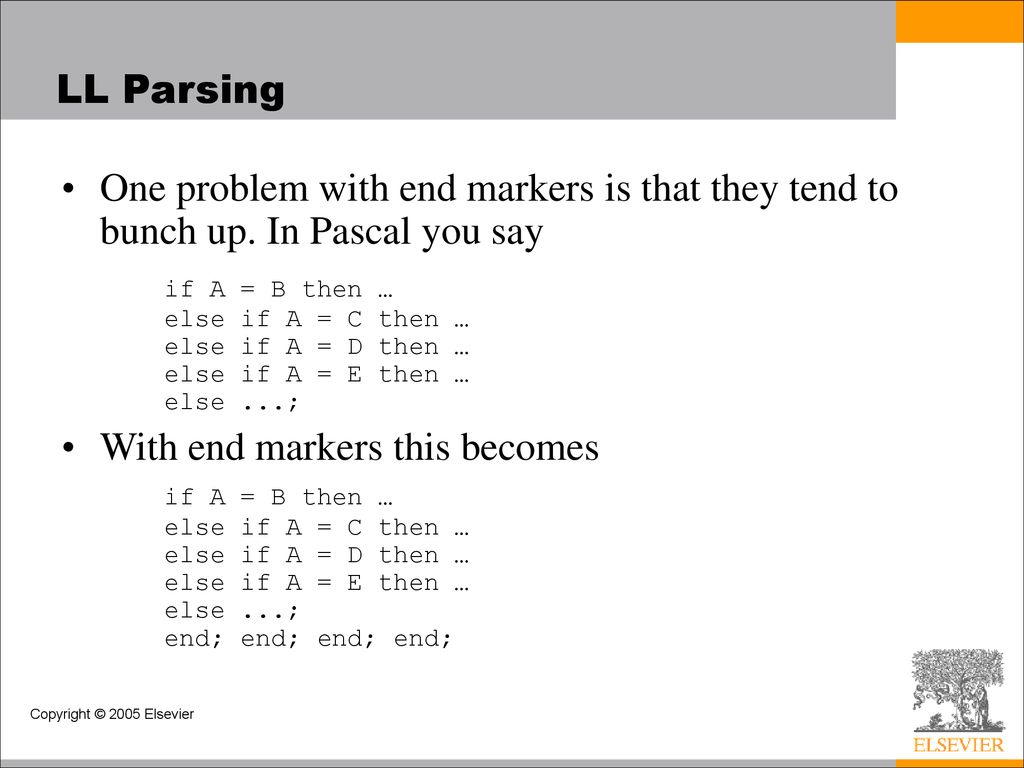

LL Parsing. One problem with end markers is that they tend to bunch up. In Pascal you say. if A = B then … else if A = C then … else if A = D then … else if A = E then … else ; With end markers this becomes. if A = B then … else if A = C then … else if A = D then … else if A = E then … else ; end; end; end; end; Copyright © 2005 Elsevier.

LL Parsing. The algorithm to build predict sets is tedious (for a real sized grammar), but relatively simple. It consists of three stages: (1) compute FIRST sets for symbols. (2) compute FOLLOW sets for non-terminals (this requires computing FIRST sets for some strings) (3) compute predict sets or table for all productions. Copyright © 2005 Elsevier.

LL Parsing. It is conventional in general discussions of grammars to use. lower case letters near the beginning of the alphabet for terminals. lower case letters near the end of the alphabet for strings of terminals. upper case letters near the beginning of the alphabet for non-terminals. upper case letters near the end of the alphabet for arbitrary symbols. greek letters for arbitrary strings of symbols. Copyright © 2005 Elsevier.

LL Parsing. Algorithm First/Follow/Predict: FIRST() == {a : * a } (if =>* THEN {} ELSE NULL) FOLLOW(A) == {a : S + A a } (if S * A THEN {} ELSE NULL) Predict (A X1 Xm) == (FIRST (X1 Xm) - {}) (if X1, , Xm * then FOLLOW (A) ELSE NULL) Details following… Copyright © 2005 Elsevier.

LL Parsing Copyright © 2005 Elsevier

LL Parsing. If any token belongs to the predict set of more than one production with the same LHS, then the grammar is not LL(1) A conflict can arise because. the same token can begin more than one RHS. it can begin one RHS and can also appear after the LHS in some valid program, and one possible RHS is Copyright © 2005 Elsevier.

LR Parsing. LR parsers are almost always table-driven: like a table-driven LL parser, an LR parser uses a big loop in which it repeatedly inspects a two-dimensional table to find out what action to take. unlike the LL parser, however, the LR driver has non-trivial state (like a DFA), and the table is indexed by current input token and current state. the stack contains a record of what has been seen SO FAR (NOT what is expected) Copyright © 2005 Elsevier.

LR Parsing. A scanner is a DFA. it can be specified with a state diagram. An LL or LR parser is a PDA. Early s & CYK algorithms do NOT use PDAs. a PDA can be specified with a state diagram and a stack. the state diagram looks just like a DFA state diagram, except the arcs are labeled with <input symbol, top-of-stack symbol> pairs, and in addition to moving to a new state the PDA has the option of pushing or popping a finite number of symbols onto/off the stack. Copyright © 2005 Elsevier.

LR Parsing. An LL(1) PDA has only one state! well, actually two; it needs a second one to accept with, but that s all (it s pretty simple) all the arcs are self loops; the only difference between them is the choice of whether to push or pop. the final state is reached by a transition that sees EOF on the input and the stack. Copyright © 2005 Elsevier.

LR Parsing. An SLR/LALR/LR PDA has multiple states. it is a recognizer, not a predictor it builds a parse tree from the bottom up. the states keep track of which productions we might be in the middle. The parsing of the Characteristic Finite State Machine (CFSM) is based on. Shift. Reduce. Copyright © 2005 Elsevier.

program stmt list $$$ stmt_list stmt_list stmt. | stmt. stmt id := expr. | read id. | write expr. expr term. | expr add op term. Copyright © 2005 Elsevier.

LR Parsing. LR grammar (continued): 9. term factor. | term mult_op factor. factor ( expr ) | id. | number. add op + | - mult op * | / Copyright © 2005 Elsevier.

LR Parsing. This grammar is SLR(1), a particularly nice class of bottom-up grammar. it isn t exactly what we saw originally. we ve eliminated the epsilon production to simplify the presentation. For details on the table driven SLR(1) parsing please note the following slides. Copyright © 2005 Elsevier.

LR Parsing Copyright © 2005 Elsevier

Shift & Reduce (for optimization) Copyright © 2005 Elsevier.

PPT - Chapter 2 Syntax PowerPoint Presentation, free download - ID

PPT - Syntax and Semantics PowerPoint Presentation, free download

How to Use ChatGPT For Making PPT? - GeeksforGeeks

CHAPTER 2: COMPONENTS OF PROGRAMMING LANGUAGE - ppt download

Slide 1 Chapter 2-b Syntax, Semantics. Slide 2 Syntax, Semantics

4 Genius Ways to Use ChatGPT To Create A PowerPoint Presentation

Chapter 5: Syntax directed translation –Use the grammar to direct

Create Stunning Presentations: 7 Best Microsoft PowerPoint Courses

Chapter 2 :: Programming Language Syntax - ppt download

Structure of programming languages - ppt video online download

PPT - Programming Languages 2nd edition Tucker and Noonan

PPT - CSCE 330 Programming Language Structures Chapter 2: Syntax

Chapter 02 php basic syntax

Words 2nd chapter- minimalist syntax